How AI and XR Are Complementary

My Insights & Takeaways From AWE 2025

XR & AI · PERSPECTIVES

Introduction

Attending AWE (Augmented World Expo, the world’s leading XR conference) for the second time was a powerful full-circle moment for me. My first experience came in 2024, when our team won the XR Hack (now called XRAI Hack), which earned us the opportunity to showcase our IntelliSpace demo at both AWE EU 2024 and AWE USA 2025. Four years ago, when I decided to take a gap year, pausing my undergraduate studies to dive into XR, I never could have imagined this journey would lead me here. Of all the remarkable experiences I've had, what moved me most was finally meeting the XR pioneers I have followed on YouTube for years, and being able to express my gratitude to them in person.

I met legendary developer Dilmer Valecillos, whose tutorials saved my life whenever I struggled with Unity and XR development.

I also met the director of Liquid City, whose short film ‘Hyper-Reality’ shaped the writing of my undergraduate thesis on virtual influencers (back in 2022!).

My passion for XR, cultivated years ago, has extended into the field of AI since 2023. A talk by Meta at AWE EU 2024 helped me articulate a vision I'd been developing intuitively. Its key message, ‘AI and XR are complementary’, deeply resonated with me and sparked new enthusiasm for connecting my two favorite areas of technology. This year at AWE, I saw this vision take shape as more bridges (tools and products, pipelines and workflows, conceptual frameworks) were being built. There's a growing discourse about the convergence of the two fields and the pivotal role this synergy will play in shaping our future.

‘AI and XR are complementary’ tagline at AWE EU 2024

‘AI needs XR’ tagline at AWE USA 2025

I’ve observed that in the XR community—at least in the US—AI now appears in nearly every conversation. However, this enthusiasm seems to flow largely in one direction. While XR professionals are increasingly curious and engaged with AI, I rarely hear AI professionals discussing how XR could benefit their field. Though AWE’s founder Ori Inbar delivered a compelling message that "AI needs XR", I didn’t see this notion elaborated further throughout the rest of the conference. As someone working at the intersection of both fields, I remain optimistic despite this imbalance. In fact, I’d like to share my own observations and perspectives on how AI needs XR to unlock its full potential.

This ‘Perspective’ essay weaves together my personal reflections, observations, and key takeaways from AWE, alongside an analysis of how AI and XR are becoming increasingly complementary. I'll explore not only how AI supports XR, but also how XR can enhance AI development. Please note that this essay is a work in progress and will be updated periodically with new insights.

The Synthesis of AI and XR

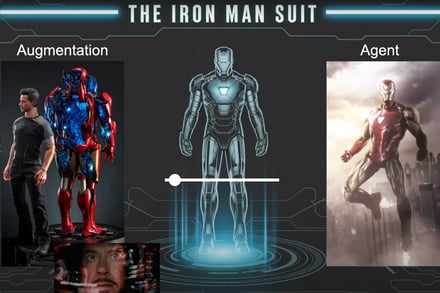

Andrej Karpathy's recent talk at Y Combinator's AI Startup School offered what I believe is an applicable framework for understanding the synergy between AI and XR. He used an Iron Man analogy: Tony Stark’s suit represents both augmentation and agency, spanning a spectrum from Stark controlling it directly to the suit operating independently. Though Karpathy didn't elaborate extensively on the augmentation aspect, I would like to dissect it further:

XR as Extended Sensory Apparatus

The first dimension of the synergy lies in XR’s role as an augmented interface: AI agents operate under the hood, processing and interpreting real-world data, while XR serves as the interface that audits, enhances, and projects these interpretations back to our senses. In this dimension, humans typically remain in charge of operations and actions. One example is Iron Man's HUD (Heads-Up Display)—a real-time visual interface driven by a continuous feedback loop between human input (voice, eye tracking, gestures) and the AI digital brain.

We could also extend this beyond visual interfaces to auditory, tactile, and other sensory modalities, where XR serves as our extended sensory apparatus, mediating how we perceive reality. Think of it as AI (AGI or ASI or whatever) being the brain, with XR functioning as our enhanced eyes, ears, and bodies.

At the other end of the spectrum, if we move the slider (see image above) from augmentation towards agency (as Karpathy envisions could happen within the next decade), we begin to imagine a future where the agentic and automatic mode is activated. In this scenario, the suit operates independently, with minimal to no human involvement. AI can perceive, think, and even act. But how does AI direct autonomous actions? What underlying capability enables an AI system not just to perceive but to act meaningfully in 3D space?

XR as Spatial Intelligence Training Grounds

This brings us to the second dimension. The answer lies in what Professor Fei-Fei Li calls "Spatial Intelligence", which links perception with action. As she emphasized, "if we want to advance AI beyond its current capabilities, we want more than AI that can see and talk. We want AI that can do." This is precisely where XR becomes crucial for AI development itself. According to Reiners et al.'s systematic review of AI-XR combinations, XR environments serve as training grounds for AI systems, providing "infinite varieties of possibilities to learn to act" in 3D space. While LLMs have made AI more knowledgeable and conversational, they still lack the spatial awareness necessary for autonomous agency. XR provides controlled, scalable environments where AI can develop this missing capability, learning to navigate, manipulate, and interact with spatial environments before being deployed in the real world (read more in the 'XR For AI' section below).

The Co-Evolution Loop

Bringing these two dimensions together, Meta's design lead Yiqi Zhao envisioned:

We're entering "an era of creation where AI becomes the digital brain, and XR turns the world into a medium."

I’d like to elaborate on this: the "medium" operates on two interconnected levels. XR serves as both a medium of interaction (how we engage with AI-enhanced reality) and a medium of training (how AI learns to navigate and act in spatial environments). This creates a powerful co-evolutionary feedback loop: XR generates rich training data and environments that develop AI's capabilities, while increasingly capable AI enhances XR experiences, making them more adaptive and responsive.

Each cycle potentially produces smarter AI and more sophisticated XR, driving both technologies toward a deeper synergy. In this framework, AI and XR actively co-evolve, with each advance in one domain accelerating progress in the other. This, I believe, represents the true synthesis of AI and XR: not merely two technologies working together, but two technologies fundamentally reshaping each other's development and capabilities.

AI For XR

AI-Powered Asset & Code Generation for XR

As both an XR developer and producer with a comprehensive understanding of the XR production pipeline, I like to think of building XR experiences as constructing a house: 3D assets are the bricks, each with its own properties such as colour, material, and texture, while coding is the construction process that determines how everything is assembled and functions. AI is revolutionising both the bricks we use (3D assets) and how we build with them (code generation).

Today, AI can generate almost every kind of asset needed in XR development, from meshes, textures, and skyboxes to character models, avatars, sound effects, and visual effects. Meta's recent announcement that AI generation of entire Horizon Worlds is coming "very soon" shows how far this transformation has progressed: we're moving beyond individual asset creation to the generation of entire virtual environments.

While much of the focus has been on asset generation, I am keener to highlight AI's equally significant impact on code generation for XR development. Two standout talks at AWE 2025, Terry Schussler’s “Vibe Coding for XR” and Preston Platt’s “AI + XR: Building a Multiplayer, Cross-Platform XR Game in One Week”, demonstrated novel AI-powered coding workflows that are reshaping how XR experiences are designed and developed. My key takeaways include:

Tool Stack and Workflow: Terry showcased a "vibestack" combining IDEs like Windsurf and Cursor with AI models (SWE-1-lite, GPT-4o) and generative AI tools including Stable Diffusion, ElevenLabs, and Blockade Labs (for skybox generation). By Terry's account, this multi-tool approach enables rapid prototyping in which AI handles about 95% of the tedious coding work while developers focus on creativity and logic, transforming them from programmers into "experience architects."

Practical Limitations: Terry also addressed vibe coding's limitations. It requires extensive iteration to achieve quality results, AI-generated code isn't perfect and needs debugging, deployment still requires traditional programming expertise, and the approach works best for modern frameworks and small-to-medium applications. High-security or mission-critical systems remain unsuitable for this methodology.

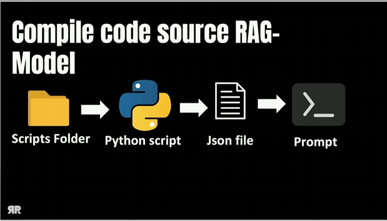

Preston introduced a pipeline that elevates AI from a vibe-coding tool to a project-specific intelligence assistant. His pipeline centers on Retrieval-Augmented Generation (RAG), a technique that enhances LLMs by giving them access to external knowledge. Instead of relying only on training data, his pipeline turns generic AI assistance into project-aware development support through three steps (see below). The benefit is that AI-generated code can integrate seamlessly with existing systems and stay consistent with established patterns.

1. Indexing: A Python script systematically scans all Unity scripts in the project, extracting code patterns, functions, variable names, and networking requirements. It converts the entire codebase into structured JSON data, creating a searchable knowledge base.

2. Retrieval: When converting scripts to networked code, the system searches this JSON knowledge base for relevant patterns, such as similar scripts, networking examples, and variable types.

3. Augmented generation: Instead of asking ChatGPT generic questions like "Make this Unity script networked," the RAG system provides augmented prompts: "Make this Unity script networked. Here's the existing codebase context: [Retrieved JSON data with relevant networking patterns, variable naming conventions, existing MonoBehaviour structures, etc.]"
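To make the three steps more concrete, here is a minimal Python sketch of a project-aware prompt builder. It is not Preston's code: the regex-based indexer, the networking marker names (borrowed from Unity Netcode for GameObjects naming, such as `NetworkBehaviour` and `ServerRpc`), and the keyword-overlap ranking are all my assumptions. A production pipeline would more likely use a proper C# parser and embedding-based search. The final prompt string is what would be sent to an LLM; the model call itself is left out.

```python
import json
import re
from pathlib import Path

# Assumed networking markers, named after Unity Netcode for GameObjects types
# and attributes. They are illustrative, not the markers Preston's script uses.
NETWORK_MARKERS = ("NetworkBehaviour", "NetworkVariable", "ServerRpc", "ClientRpc")

# Deliberately simple regexes; a robust indexer would use a real C# parser.
CLASS_RE = re.compile(r"class\s+(\w+)\s*:\s*(\w+)")
METHOD_RE = re.compile(r"\b(?:void|bool|int|float|string)\s+(\w+)\s*\(")
FIELD_RE = re.compile(r"\b(?:public|private|protected)\s+(\w+(?:<\w+>)?)\s+(\w+)\s*[;=]")


def index_script(file_name: str, source: str) -> dict:
    """Step 1 (indexing): turn one C# script into a JSON-serialisable record."""
    cls = CLASS_RE.search(source)
    return {
        "file": file_name,
        "class": cls.group(1) if cls else None,
        "base": cls.group(2) if cls else None,
        "methods": METHOD_RE.findall(source),
        "fields": [{"type": t, "name": n} for t, n in FIELD_RE.findall(source)],
        "networking": [m for m in NETWORK_MARKERS if m in source],
    }


def index_project(root: str, out_file: str = "knowledge_base.json") -> list[dict]:
    """Index every .cs file under `root` and save the knowledge base as JSON."""
    records = [index_script(p.name, p.read_text(encoding="utf-8"))
               for p in sorted(Path(root).rglob("*.cs"))]
    Path(out_file).write_text(json.dumps(records, indent=2), encoding="utf-8")
    return records


def identifiers(text: str) -> set[str]:
    """Collect identifier-like tokens used for keyword matching."""
    return set(re.findall(r"[A-Za-z_]\w*", text))


def retrieve(source: str, knowledge_base: list[dict], k: int = 2) -> list[dict]:
    """Step 2 (retrieval): prefer networked scripts, then shared identifiers.

    Keyword overlap stands in for the embedding search that RAG systems
    more commonly use.
    """
    query = identifiers(source)

    def score(record: dict) -> tuple[bool, int]:
        overlap = len(query & identifiers(json.dumps(record)))
        return (bool(record["networking"]), overlap)

    return sorted(knowledge_base, key=score, reverse=True)[:k]


def build_prompt(source: str, context: list[dict]) -> str:
    """Step 3 (augmentation): prepend the retrieved records to the plain request."""
    return ("Make this Unity script networked. Here's the existing codebase context:\n"
            f"{json.dumps(context, indent=2)}\n\nScript:\n{source}")


# Tiny in-memory examples standing in for files in a Unity project.
PLAYER_HEALTH = """using Unity.Netcode;
public class PlayerHealth : NetworkBehaviour {
    private NetworkVariable<int> health = new NetworkVariable<int>(100);
    [ServerRpc] public void TakeDamageServerRpc(int amount) { health.Value -= amount; }
}"""
DOOR_OPENER = """using UnityEngine;
public class DoorOpener : MonoBehaviour {
    public float openAngle = 90f;
    public void Open() { transform.Rotate(0, openAngle, 0); }
}"""
COIN_PICKUP = """using UnityEngine;
public class CoinPickup : MonoBehaviour {
    public int value = 1;
    private void OnTriggerEnter(Collider other) { Destroy(gameObject); }
}"""

if __name__ == "__main__":
    kb = [index_script("PlayerHealth.cs", PLAYER_HEALTH),
          index_script("DoorOpener.cs", DOOR_OPENER)]
    print(build_prompt(COIN_PICKUP, retrieve(COIN_PICKUP, kb, k=1)))
```

Ranking networked scripts first reflects the task in Preston's example (converting scripts to networked code); for a different task, the scoring rule in `retrieve` would change accordingly.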

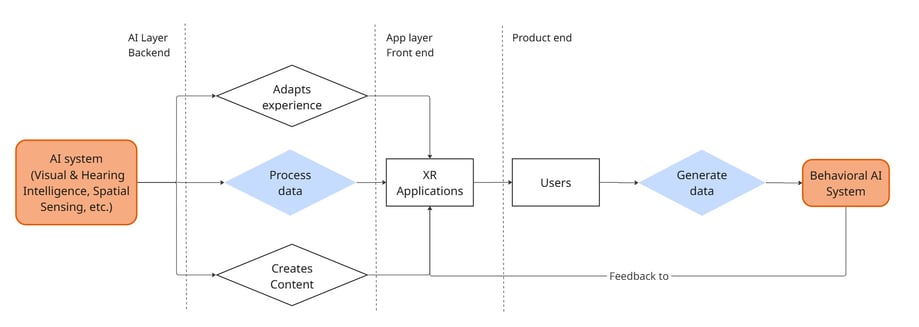

The way I see AI empowering XR is illustrated in this flow diagram: AI systems provide the underlying intelligence for visual and auditory processing (currently the most feasible and widely adopted modalities), spatial sensing, and content generation. These capabilities enable XR applications to adapt user experiences in real time and generate dynamic, context-aware content. On the user side, interactions within these applications produce behavioral data, which in turn feeds back into the AI system, creating a continuous learning loop. In this essay I have focused on the content creation component of this framework. The experience adaptation and behavior-driven AI aspects will be explored in future writing as my understanding of these complex areas continues to evolve (so stay tuned!).

XR For AI

This section outlines several cases supporting a perspective I believe is often overlooked: XR unlocks AI's potential, and XR's contributions to AI advancement deserve as much attention as AI's contributions to XR. Those who question the notion of “AI needs XR” likely view AI primarily through the lens of LLMs. However, as AI evolves toward Spatial Intelligence (the ability to understand, reason about, interact with, and generate 3D worlds), I argue that XR will become essential. Why?

The team at nunu.ai transferred one of their AI agents—which had been trained solely in virtual environments—to a real-world robot body, and it was able to navigate successfully. Source: Jan Schnyder on X

The success of virtual-to-real transfer provides one compelling case. Companies like nunu.ai have demonstrated that AI agents trained exclusively in virtual game environments can successfully operate real-world robot bodies. As co-founder Jan Schnyder notes, "Starting in gaming gives us a massive head start. Game environments allow us to build agents and perform actions for which we would have to wait several years still to do in the real world."

One of the core challenges in building spatially intelligent AI is the scarcity of spatial data. Unlike language, which has been documented for centuries, training data for Spatial Intelligence is sparse and fragmented. This is precisely where XR plays a transformative role. XR environments offer something the real world cannot: scalable, controllable, and richly interactive 3D simulations. These synthetic environments can either replicate real-world physics or push beyond the boundaries of what’s possible, providing fertile ground for generating the high-quality spatial data that AI systems require.

In Professor Fei-Fei Li's recent talk at Y Combinator, she expressed excitement about the metaverse despite current hardware limitations. Although she didn't go into detail about how her startup, World Labs, is training Large World Models (LWMs), it’s incredibly encouraging to see such a prominent AI expert recognise XR’s promising potential.

The Spatial Data Challenge

One case is Meta Reality Labs’ SceneScript research, which provides a novel method for reconstructing environments and representing the layout of physical spaces. Rather than training on limited real-world data, which would also raise privacy concerns, the SceneScript team created Aria Synthetic Environments, a synthetic dataset of 100,000 completely unique interior environments, each described in the SceneScript language and paired with a simulated walkthrough video. This demonstrates how XR simulations can supply the volume, consistency, and variation needed to train AI systems for spatial recognition.
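To illustrate how a synthetic pipeline can supply volume and variation on demand, here is a minimal Python sketch that procedurally generates unique rectangular rooms and describes each one as a list of text commands. It is not Meta's pipeline: the `make_wall` and `make_door` command names, their parameters, and the sampling ranges are my own assumptions in the spirit of SceneScript, not its official grammar, and the paired walkthrough videos are out of scope.

```python
import random
from dataclasses import dataclass

DOOR_WIDTH = 0.9   # metres; assumed fixed for simplicity
DOOR_HEIGHT = 2.1  # metres; assumed fixed for simplicity


@dataclass(frozen=True)
class Room:
    width: float        # extent along x, metres
    depth: float        # extent along y, metres
    height: float       # wall height, metres
    door_wall: int      # index (0-3) of the wall that holds the door
    door_center: float  # distance from the wall's start corner to the door centre


def sample_room(rng: random.Random) -> Room:
    """Draw one rectangular room with a single door; all values are randomised."""
    width = round(rng.uniform(3.0, 8.0), 2)
    depth = round(rng.uniform(3.0, 8.0), 2)
    height = round(rng.uniform(2.4, 3.2), 2)
    door_wall = rng.randrange(4)
    wall_length = width if door_wall in (0, 2) else depth
    # Keep the whole door on the wall, with a 10 cm margin at each end.
    margin = DOOR_WIDTH / 2 + 0.1
    door_center = round(rng.uniform(margin, wall_length - margin), 2)
    return Room(width, depth, height, door_wall, door_center)


def describe(room: Room) -> list[str]:
    """Serialise a room as SceneScript-style text commands (hypothetical syntax)."""
    corners = [(0.0, 0.0), (room.width, 0.0), (room.width, room.depth), (0.0, room.depth)]
    commands = []
    for i in range(4):
        (ax, ay), (bx, by) = corners[i], corners[(i + 1) % 4]
        commands.append(f"make_wall, id={i}, a_x={ax}, a_y={ay}, b_x={bx}, b_y={by}, "
                        f"height={room.height}")
    commands.append(f"make_door, id=4, wall_id={room.door_wall}, "
                    f"position={room.door_center}, width={DOOR_WIDTH}, height={DOOR_HEIGHT}")
    return commands


def generate_dataset(n: int, seed: int = 0) -> list[list[str]]:
    """Generate n distinct scene descriptions; the seed makes the set reproducible."""
    rng = random.Random(seed)
    seen, scenes = set(), []
    while len(scenes) < n:
        commands = describe(sample_room(rng))
        key = tuple(commands)
        if key not in seen:  # skip duplicates so every scene in the dataset is unique
            seen.add(key)
            scenes.append(commands)
    return scenes


if __name__ == "__main__":
    for line in generate_dataset(1, seed=42)[0]:
        print(line)
```

Because the generator is seeded, the same seed always reproduces the same dataset, which is one way synthetic data stays controllable; scaling up is a matter of raising `n`.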

Some Practical Cases

Closing Speech

As stated on AWE's "The AI + XR Imperative" page, the future won't belong to either AI or XR; it belongs to both. AI is intelligent but disembodied; XR is immersive but requires intelligence to thrive. AI needs XR to perceive and interact with the physical world, while XR needs AI to make experiences better, faster, and more accessible. With the combined power of these cutting-edge technologies, we must also embrace the shared responsibility that comes with such transformative capability.

Canadian philosopher Marshall McLuhan once prophesied:

"I expect to see the coming decades transform the planet into an art form; the new man, linked in a cosmic harmony that transcends time and space, will sensuously caress and mold and pattern every facet of the terrestrial artifact as if it were a work of art, and man himself will become an organic art form."

This quote has always been my life motto—something I strive to interpret and live by. As we stand at the convergence of exciting technologies, I believe it's important to reflect on the ethical implications of these technologies, especially through the lens of transhumanism. In my future essays, I’ll attempt to weave in some of these thoughts and explore how we can build responsibly, ensuring our digital tools serve to enhance, not diminish, our shared humanity.

References

https://www.awexr.com/ai-loves-xr

https://www.businessinsider.com/world-model-ai-explained-2025-6

https://speedrun.substack.com/p/the-rise-of-the-ai-companion

https://speedrun.substack.com/p/14-big-ideas-for-games-in-2025

https://www.projectaria.com/scenescript/

https://www.frontiersin.org/journals/virtual-reality/articles/10.3389/frvir.2021.721933/full